In the rapidly evolving landscape of artificial intelligence (AI), businesses face legal, reputational, and operational risks, along with the crucial task of building trust in AI models. As AI technologies continue to advance, organizations increasingly need to understand and mitigate these risks effectively.

Legal Risks

Growing List of Legal Implications

The legal landscape surrounding AI is continuously expanding, with regulations and laws emerging to address various concerns. Recent developments such as the EU AI Act, the New York Hiring Bias Law, and White House Executive Orders on generative AI highlight the increasing attention given to AI governance and accountability. As AI becomes more integrated into society, the number of legal considerations is likely to keep growing.

Reputation Risks

Brand Matters

The reputation of a business can be significantly impacted by AI-related incidents. Issues such as loss of productivity, fines due to regulatory non-compliance, unintentional disclosure of personally identifiable information (PII), or exposure of trade secrets can tarnish a company’s brand and erode consumer trust. It’s imperative for organizations to prioritize ethical AI practices to safeguard their reputation.

Operational Risks

Loss of Productivity and Regulatory Fines

Operational risks associated with AI include the loss of productivity due to model malfunctions or errors, as well as the potential for substantial fines resulting from non-compliance with regulations. Unintentional exposure of PII or trade secrets can also lead to severe consequences, emphasizing the importance of robust operational controls.

How to Build Trust in AI Models

There are five key areas you need to focus on to build trusted AI models:

Scope

Understanding the scope of AI models is crucial for managing expectations and mitigating risks. Organizations must clearly define what the model can and cannot do. For instance, an AI chatbot may not be suitable for providing pricing information or critical health care advice where human life is at stake, and should redirect users to human agents for such inquiries.

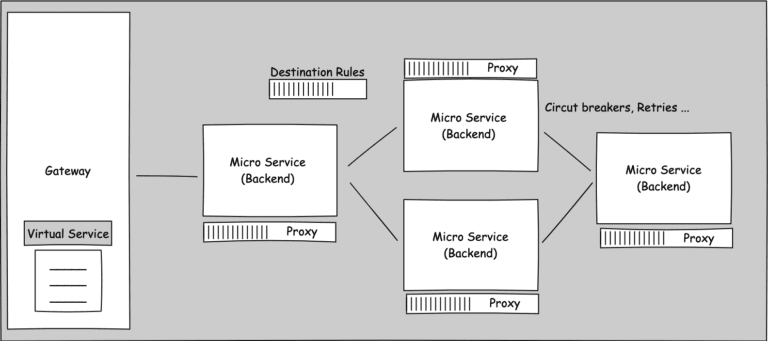
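The scope rule described above can be sketched as a small routing check that runs before the chatbot answers. This is a minimal illustration, not a production classifier: the topic names, the keyword lists, and the `route_query` function are all assumptions made for the example, and a real system would likely use a trained intent classifier rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical out-of-scope topics and trigger keywords (illustrative only).
OUT_OF_SCOPE_KEYWORDS = {
    "pricing": ("price", "pricing", "cost", "quote", "discount"),
    "health": ("diagnosis", "dosage", "symptom", "medication", "treatment"),
}


@dataclass
class RoutingDecision:
    handled_by: str  # "model" or "human_agent"
    reason: str


def route_query(query: str) -> RoutingDecision:
    """Send queries that touch an out-of-scope topic to a human agent."""
    text = query.lower()
    for topic, keywords in OUT_OF_SCOPE_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return RoutingDecision("human_agent", f"out of scope: {topic}")
    return RoutingDecision("model", "in scope")


if __name__ == "__main__":
    print(route_query("What is the price of the premium plan?"))
    print(route_query("How do I reset my password?"))
```

Keeping the out-of-scope list in one explicit place also documents the model's boundaries for anyone reviewing it later.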

Foundation

Building trust in AI models requires a solid foundation built on transparency and understanding. Organisations should be knowledgeable about the type of model being used, the data it relies on, and its architecture. Tools like Model Cards can aid in documenting essential information about the model’s development and usage.
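As a rough illustration of what a Model Card might record, the sketch below stores the model type, data sources, and architecture in a small dataclass and serializes it to JSON. The field names and the example values are assumptions chosen for this example; they do not follow any particular Model Card schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    # All field names here are hypothetical, chosen for illustration.
    name: str
    version: str
    model_type: str
    architecture: str
    training_data: List[str]
    intended_use: str
    out_of_scope_use: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the card so it can be stored next to the model."""
        return json.dumps(asdict(self), indent=2)


# Example values are invented for the sketch.
card = ModelCard(
    name="support-chatbot",
    version="1.0.0",
    model_type="text generation",
    architecture="transformer decoder",
    training_data=["public product documentation", "anonymized support tickets"],
    intended_use="Answer general product questions",
    out_of_scope_use=["pricing information", "health care advice"],
    known_limitations=["may produce hallucinated answers"],
)

if __name__ == "__main__":
    print(card.to_json())
```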

Governance

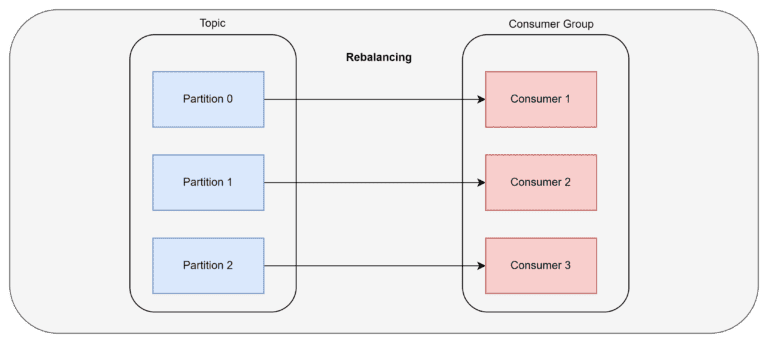

Establishing robust governance practices ensures accountability throughout the AI model lifecycle. This includes documenting the training process, monitoring for biases and hallucinations, recording who is responsible for what, and using version control to track every change made to the model over time.
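One way to picture the "who did what, and to which version" part of governance is an append-only change log. The sketch below is a simplified in-memory version; the `ChangeRecord` and `ModelChangeLog` names, their fields, and the example entries are assumptions for illustration, and a real deployment would persist the records in a database or a model registry.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class ChangeRecord:
    model_version: str
    author: str
    action: str   # e.g. "retrain", "bias-review", "deploy"
    details: str
    timestamp: str


class ModelChangeLog:
    """Append-only record of changes made to a model (hypothetical helper)."""

    def __init__(self) -> None:
        self._records: List[ChangeRecord] = []

    def record(self, model_version: str, author: str,
               action: str, details: str) -> ChangeRecord:
        entry = ChangeRecord(
            model_version=model_version,
            author=author,
            action=action,
            details=details,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self._records.append(entry)
        return entry

    def history(self, model_version: str) -> List[ChangeRecord]:
        """Return every recorded change for one model version, oldest first."""
        return [r for r in self._records if r.model_version == model_version]


if __name__ == "__main__":
    log = ModelChangeLog()
    log.record("1.0.0", "alice", "retrain", "Initial training run")
    log.record("1.1.0", "bob", "bias-review", "Reviewed outputs for bias")
    for entry in log.history("1.1.0"):
        print(entry)
```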

Monitoring Risks

Continuous monitoring of AI models is essential for detecting and mitigating risks. Organisations should track performance metrics specific to bias and hallucinations, and automate the monitoring process where possible to ensure ongoing compliance with regulatory requirements.
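An automated check of this kind might look like the sketch below. It computes one common bias metric, the gap in positive-outcome rates between groups (often called the demographic parity difference), and compares tracked metrics against limits. The metric names, the threshold values, and the sample data are assumptions for illustration; appropriate limits depend on the use case and the applicable regulation.

```python
from typing import Dict, List

# Hypothetical limits, chosen only for this example.
THRESHOLDS: Dict[str, float] = {
    "hallucination_rate": 0.05,
    "demographic_parity_gap": 0.10,
}


def demographic_parity_gap(outcomes_by_group: Dict[str, List[int]]) -> float:
    """Largest difference in positive-outcome rate between any two groups.

    Each list holds 0/1 outcomes (1 = positive decision) for one group.
    """
    rates = [sum(o) / len(o) for o in outcomes_by_group.values() if o]
    if len(rates) < 2:
        return 0.0
    return max(rates) - min(rates)


def check_metrics(metrics: Dict[str, float],
                  thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return one alert message per metric that is missing or over its limit."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing")
        elif value > limit:
            alerts.append(f"{name}: {value:.3f} exceeds limit {limit:.3f}")
    return alerts


if __name__ == "__main__":
    gap = demographic_parity_gap({"group_a": [1, 1, 0, 0], "group_b": [1, 0, 0, 0]})
    for alert in check_metrics({"hallucination_rate": 0.02,
                                "demographic_parity_gap": gap}):
        print(alert)
```

A scheduler or pipeline step could run `check_metrics` after each evaluation batch and notify the model owner whenever the returned list is not empty.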

Compliance

Mapping AI models directly to regulatory requirements helps ensure compliance and mitigate legal risks. By linking different parts of the model to specific regulations, organizations can quickly adapt to changes in requirements and adjust their models accordingly to avoid penalties.
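The mapping idea can be sketched as a simple lookup from model components to the regulations that touch them, so that a change in one regulation immediately shows which components need review. The component names and the assignments below are hypothetical and purely illustrative; which regulation applies to which component is a question for legal review.

```python
from typing import Dict, List

# Hypothetical components mapped to regulations named in this article.
# The assignments are illustrative only.
COMPONENT_REGULATIONS: Dict[str, List[str]] = {
    "resume_screening_classifier": ["New York Hiring Bias Law", "EU AI Act"],
    "training_data_pipeline": ["EU AI Act"],
    "chat_response_generator": ["EU AI Act"],
}


def components_affected_by(
    regulation: str,
    mapping: Dict[str, List[str]] = COMPONENT_REGULATIONS,
) -> List[str]:
    """List the components that must be reviewed when a regulation changes."""
    return sorted(name for name, regs in mapping.items() if regulation in regs)


if __name__ == "__main__":
    print(components_affected_by("New York Hiring Bias Law"))
    print(components_affected_by("EU AI Act"))
```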

In conclusion, navigating AI risks requires a comprehensive approach that addresses legal, reputational, operational, and trust-building aspects. By staying informed about evolving regulations, implementing robust governance practices, and prioritising transparency and accountability, organisations can mitigate risks and build trust in their AI models. Proactive risk management is essential for long-term success and sustainability in the dynamic landscape of AI.